Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Many media outlets, large and small, both text and video, use this blog as a resource for technical information on mixed reality headsets. Sometimes, they even give credit. In the past two weeks, this blog was prominently cited in YouTube videos by Linus Tech Tips (LTT) and Artur Tech Tales. Less fortunately, Adam Savage’s Tested, with host Norman Chan in his Apple Vision Pro Review, used a spreadsheet test pattern from this blog to demonstrate foveated rendering issues.

I will follow up with a discussion of the Linus Tech Tips video, which deals primarily with human factors. In particular, I want to discuss the “Information Density” issue of virtual versus physical monitors, which the LTT video touched on.

In their “Apple Vision Pro—A PC Guy’s Perspective,” Linus Tech Tips showed several pages from this blog and was nice enough to prominently feature the pages they were using along with their web addresses (below). I also enjoyed their somewhat humorous physical “simulation” of the AVP (more on that in a bit). LTT used images from the blog (below-left and below-center) to explain how the optics distort the display and how the AVP’s processing is combined with eye tracking to reduce that distortion. LTT also used images from the blog (below-right) to show how the field of view (FOV) changes with the distance from the eye to the optics.

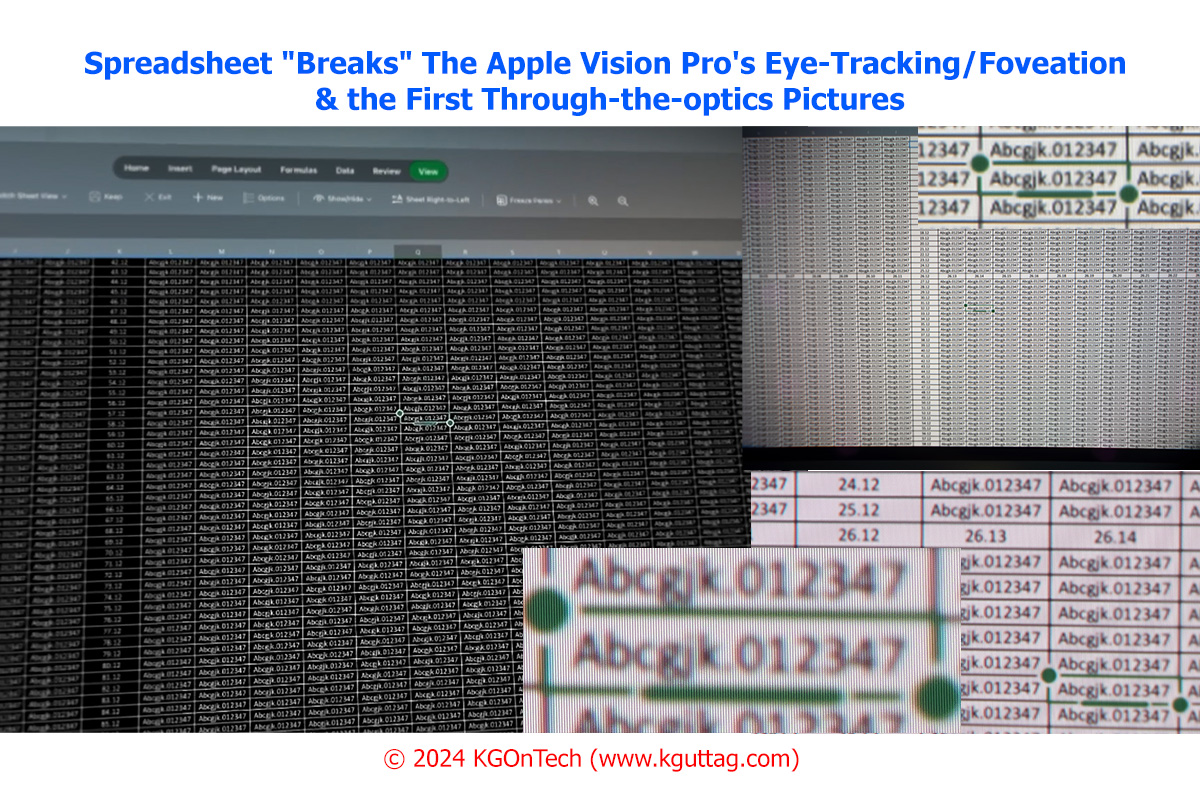

Adam Savage’s Tested, in host Norman Chan’s review of the Apple Vision Pro, used this blog’s AVP-XLS-on-BLACK-Large-Array test pattern from Spreadsheet “Breaks” The Apple Vision Pro’s (AVP) Eye-Tracking/Foveation & the First Through-the-optics Pictures to show that the foveated rendering boundaries of the Apple Vision Pro are visible. While the spreadsheet was taken from this blog, I didn’t see any reference given.

The Adam Savage’s Tested video either missed or got wrong several points:

Artur’s Tech Tales’ Apple Vision Pro OPTICS—Deep Technical Analysis includes an interview with, and a presentation by, Hypervision’s CEO, Arthur Rabner, who mentions this blog several times in his presentation. The video details the AVP optics and follows up on Hypervision’s white paper discussed in Apple Vision Pro (Part 4) – Hypervision Pancake Optics Analysis.

Much of the Linus Tech Tips (LTT) video deals with human factors and user interface issues. For the rest of this article, I will discuss and expand upon comments made in the LTT video. Linus also commented on the passthrough camera’s “shutter angle,” but I moved my discussion of that subject to the “Appendix” at the end because it is a bit off-topic and needs some explanation.

At 5:18 in the video, Linus takes the headset off and shows the red marks the Apple Vision Pro left on his face (below-left), which I think may have been intentional after Linus complained about the headband earlier. For reference, I have included the marks the Apple Vision Pro leaves on my face (below-right). I sometimes joke that if I wear it long enough, it will wear a groove in my skull to help hold up the headset.

An Apple person who is an expert at AVP fitting could probably tell from the marks on our faces whether we have the “wrong” face interface. Linus’s headset makes stronger marks on his cheeks, whereas mine makes the darkest marks on my forehead. Because I use inserts, I have a fairly thick (but typical for insert wearers) 25W face hood with the thinner “W” interface, and the AVP’s eye detection often complains that I need to get my eyes closer to the lenses. So, I end up cranking the solo band almost to the point where I can feel my pulse in my forehead, like a blood pressure cuff (perhaps a future health “feature”?).

For virtual reality, Linus is happy with the resolution and placement of virtual objects in the real world. But he stated, “Unfortunately, the whole thing falls apart when you interact with the game.” Linus then goes into the many problems of not having controllers and relying on hand tracking alone.

I’m not a VR gamer, but I agree with The Verge that the AVP’s hand and eye tracking is “magic until it’s not.” I am endlessly frustrated with eye-tracking-based finger selection. Even with the headset cranked hard against my face, the eye tracking is unstable, even after recalibrating the IPD and eye tracking many times. I consider eye and hand tracking a good “secondary” selection tool that needs an accurate primary selection tool alongside it. I have an Apple Magic Trackpad that “works” with the AVP, but it does not work in “3-D space.”

Linus discussed using the Steam app on the AVP to play games. He liked that he could get a large image and lie back, but there is some lag, which could be a problem for some games, particularly competitive ones. The resolution is also limited to 1080p, and compression artifacts are noticeable.

Linus also discussed using the Sunshine (streaming server on the PC) and Moonlight (remote access on the AVP) apps to mirror Windows PCs. While this combination supports up to 4K at 120 Hz, Linus says you will need an incredibly good wireless access point for the higher resolutions and frame rates. In terms of effective resolution and what I like to call “Information Density,” these apps will still lose significant resolution because they simulate a virtual monitor in 3-D space, as I discussed in Apple Vision Pro (Part 5C) – More on Monitor Replacement is Ridiculous and Apple Vision Pro (Part 5A) – Why Monitor Replacement is Ridiculous and showed with through-the-lens pictures in Apple Vision Pro’s (AVP) Image Quality Issues – First Impressions and Apple Vision Pro’s Optics Blurrier & Lower Contrast than Meta Quest 3.

From a “pro” design perspective, it is rather poor on Apple’s part that the AVP does not support a direct Thunderbolt link for both data and power when it already requires a wired battery. I should note that the $300 developer strap supports only a lowish 100 Mb/s Ethernet data connection (compared with 0.48 to 40 Gb/s for USB-C/Thunderbolt) through a USB-C connector while still requiring the battery pack for power. There are many unused pins on the developer strap, and there are indications in the AVP’s software that the strap might support higher-speed connections (and maybe access to peripherals) in the future.
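To put those link speeds in perspective, here is a back-of-the-envelope sketch. The 100 Mb/s and 0.48 to 40 Gb/s figures come from the paragraph above; the uncompressed 4K, 60 Hz, 24-bit stream is a hypothetical example chosen only for illustration, and the calculation ignores blanking intervals, protocol overhead, and compression.

```python
# Back-of-the-envelope link arithmetic.
# Link speeds are from the text; the 4K/60 Hz/24-bit stream is a hypothetical example.

DEV_STRAP_BPS = 100e6     # developer strap Ethernet, bits per second
USB2_BPS = 0.48e9         # low end of the quoted USB-C range (USB 2.0 speed)
THUNDERBOLT_BPS = 40e9    # high end of the quoted range (Thunderbolt)


def raw_video_bps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed video bit rate, ignoring blanking and protocol overhead."""
    return width * height * bits_per_pixel * fps


stream = raw_video_bps(3840, 2160, 24, 60)

print(f"Thunderbolt / dev strap:  {THUNDERBOLT_BPS / DEV_STRAP_BPS:.0f}x")
print(f"USB 2.0 / dev strap:      {USB2_BPS / DEV_STRAP_BPS:.1f}x")
print(f"Raw 4K60 stream:          {stream / 1e9:.2f} Gb/s")
print(f"Dev strap share of that:  {DEV_STRAP_BPS / stream:.2%}")
```

Under these assumptions, 40 Gb/s Thunderbolt is 400 times the strap’s 100 Mb/s, and a raw 4K60 stream needs roughly 12 Gb/s, so anything display-like sent over the strap would have to be heavily compressed.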

In terms of video passthrough, at 13:43 in the video, Linus comments on the warping of close objects and on depth perception being “a bit off.” He also noted that you are looking at the world through phone-type cameras. When you move your head, the passthrough looks duller and significantly blurred (“Jello”).

The same Linus Tech Tips video also included humorous simulations of the AVP environment, with people carrying large-screen monitors. At one point (shown below), they show a person wearing a respirator mask (to “simulate” the headset) surrounded by three very large monitors/TVs. They show how much the user has to move their head to see everything. LTT doesn’t mention that, on the AVP, the angular resolution of those monitors would be fairly low, which is why they need to be so big.
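Angular resolution, in pixels per degree (PPD), is what ties monitor size to legibility. The sketch below computes the horizontal angle a flat monitor subtends and its average PPD, plus how wide a virtual monitor would have to be to show a given number of pixels at a given headset PPD. The 27-inch 4K monitor at 24 inches and the 35 PPD headset value are hypothetical inputs for illustration, not measurements of the AVP or of the monitors in the LTT video.

```python
import math


def monitor_angles(diag_in: float, horiz_px: int, vert_px: int, distance_in: float):
    """Horizontal angle (degrees) subtended by a flat monitor viewed head-on from
    its center, and the average pixels per degree across that width."""
    aspect = horiz_px / vert_px
    width_in = diag_in * aspect / math.hypot(aspect, 1.0)  # assumes square pixels
    width_deg = math.degrees(2 * math.atan(width_in / (2 * distance_in)))
    return width_deg, horiz_px / width_deg


def required_width_deg(content_px: int, display_ppd: float) -> float:
    """Angular width needed to show content_px pixels at display_ppd (1:1 sampling)."""
    return content_px / display_ppd


# Hypothetical inputs, not measurements.
width_deg, ppd = monitor_angles(27, 3840, 2160, 24)
print(f"27-inch 4K at 24 in: {width_deg:.1f} deg wide, ~{ppd:.0f} PPD average")
print(f"3840 px at a hypothetical 35 PPD needs {required_width_deg(3840, 35):.0f} deg")
```

With these made-up numbers, matching the physical monitor’s pixel count would take a virtual monitor more than 100 degrees wide, which illustrates why simulated monitors end up so large.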

Linus discussed the AVP’s difficulty sharing documents with others in the same room. Part of this is because the MacBook’s display goes blank when mirroring onto the AVP. Linus discussed how he had to use a “bizarre workaround” of setting up a video conference to share a document with people in the same room.

The most important demonstration in the LTT video involves what I like to call the “Information Density” problem. The AVP, or any VR headset, has low information density when trying to emulate a 2-D physical monitor in 3-D space. It is a fundamental problem: the effective resolution of the AVP is well under half (linearly; under a quarter two-dimensionally) that of the monitors being simulated (as discussed in Apple Vision Pro (Part 5C) – More on Monitor Replacement is Ridiculous and Apple Vision Pro (Part 5A) – Why Monitor Replacement is Ridiculous, and shown with through-the-lens pictures in Apple Vision Pro’s (AVP) Image Quality Issues – First Impressions and Apple Vision Pro’s Optics Blurrier & Lower Contrast than Meta Quest 3). The key contributors to this issue are:

Below is a close-up of the center (best case) as seen through the AVP’s optics (left) and the same image at about the same FOV on a computer monitor (right). Content must be blown up about 2x (linearly) to be as legible on the AVP as on a good computer monitor.

Some current issues with monitor simulation are “temporary software issues” that can be improved, but that is not true with the information density problem.

Linus states in the video (at 17:48) that setting up the AVP is a “bit of a chore,” but it should be understood that most of the “chore” is due to current software limitations that better software could fix. The most obvious problems Linus identified are that the AVP does not currently support multiple screens from a MacBook and that it does not save the MacBook’s virtual screen location. I think most people expect Apple to fix these problems in the near future.

At 18:20, Linus shows the real multiple-monitor workspace of someone doing video editing (see below). While it is a bit extreme for some people, with two vertically stacked 4K monitors in landscape orientation and a third 4K monitor in portrait mode, it is not that far off from what I have been using for over a decade with two large side-by-side monitors (today I have a 34″ 22:9 1440p “center monitor” and a 28″ 4K side monitor, both in landscape mode).

I want to note a comment made by Linus (with my bold emphasis):

“Vision Pro sounds like having your own personal Colin holding a TV for you and then allowing it to be repositioned and float effortlessly wherever you want. But in practice, I just don’t really often need to do that, and neither do a lot of people. For example, Nicole, here’s a real person doing real work [and] for a fraction of the cost of a Vision Pro, she has multiple 4K displays all within her field of view at once, and this is how much she has to move her head in order to look between them. Wow.

Again, I appreciate this thing for the technological marvel that it is: a 4K display in a single square inch. But for optimal text clarity, you need to use most of those pixels, meaning that the virtual monitor needs to be absolutely massive for the Vision Pro to really shine.”

The bold highlights above make the point about information density. A person can see all the information at once and then, with minimal eye and head movement, look at the specific information they need at that moment. Making text bigger only “works” for small amounts of content, because it slows reading by requiring larger head and eye movements and tends to tire the eyes as they move over wider angles.

To drive the point home, the LTT video “simulates” an AVP desktop, assuming multiple-monitor support, by physically placing three very large monitors side by side with two smaller displays on top. They had the simulated user wear a paint respirator mask to “simulate” the headset (likely for comic effect). I would add that each of those large monitors, even at that size, would have the resolution capability on the AVP of something more like a 1920×1080 monitor, or about half the content of a 4K monitor linearly and one-fourth by area.
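The half-linear, one-quarter-area relationship is simple arithmetic; the sketch below just makes it explicit for the 1920×1080 versus 3840×2160 (4K) comparison. Treating an AVP virtual monitor as equivalent to 1080p is the approximation from the text, not an exact figure.

```python
def content_ratio(eff_w: int, eff_h: int, ref_w: int, ref_h: int):
    """Return (linear ratio, area ratio) of an effective resolution to a reference one."""
    linear = eff_w / ref_w
    area = (eff_w * eff_h) / (ref_w * ref_h)
    return linear, area


linear, area = content_ratio(1920, 1080, 3840, 2160)
print(f"linear: {linear}, area: {area}")  # linear: 0.5, area: 0.25
```

Equivalently, enlarging text 2x linearly to make it as legible as on a good monitor cuts the amount of content that fits in the same field of view to one-quarter.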

Quoting Linus about this part of the video (with my bold emphasis):

It’s more like having a much larger TV that is quite a bit farther away, and that is a good thing in the sense that you’ll be focusing more than a few feet in front of you. But I still found that in spite of this, that it was a big problem for me if I spent more than an hour or so in spatial-computing-land.

Making this productivity problem worse is the fact that, at this time, the Vision Pro doesn’t allow you to save your layouts. So every time you want to get back into it, you’ve got to put it on, authenticate, connect to your MacBook, resize that display, open a Safari window, put that over there where you want it, maybe your emails go over here, it’s a lot of friction that our editors, for example, don’t go through every time they want to sit down and get a couple hours of work done before their eyes and face hurt too much to continue.

I would classify many of the issues Linus raised in the above quote as solvable in software for the AVP. What is not likely solvable in software are the headaches, the eye strain, and the AVP’s low angular resolution relative to a modern computer monitor in a typical setup.

While I was in the Los Angeles area to speak at the SID LA One Day conference, I stopped in at Bigscreen to try out their Bigscreen Beyond headset. I could wear the Bigscreen Beyond for almost three hours, whereas I typically get a splitting headache with the AVP after about 40 minutes. I don’t know exactly why, but it is likely a combination of much less pressure on my forehead and something to do with the optics. Whatever it is, the difference for me is clearly large. It was also much easier to drink from a can (right) with the Bigscreen’s much smaller headset.

It is gratifying to see the blog’s work reach a wide audience worldwide (about 50% of this blog’s audience is outside the USA). As a result of other media outlets picking up this blog’s work, the readership roughly doubled last month to about 50,000 (Google Analytics “Users”).

I particularly appreciated the Linus Tech Tips example of a real workspace in contrast to their “simulation” of the AVP workspace. It helps illustrate some of the human factors issues with having a headset simulate a computer monitor, including information density. I keep pounding on the Information Density issue because it seems underappreciated by many of the media reports on the AVP.

I moved this discussion to this Appendix because it involves some technical discussion that, while it may be important, may not be of interest to everyone and takes some time to explain. At the same time, I didn’t want to ignore it as it brings up a potential issue with the AVP.

At about 16:30 in the LTT Video, Linus also states that the Apple Vision Pro cameras use “weird shutter angles to compensate for the flickering of lights around you, causing them [the AVP] to crank up the ISO [sensitivity], adding a bunch of noise to the image.”

For those who don’t know, “shutter angle” (see also https://www.digitalcameraworld.com/features/cheat-sheet-shutter-angles-vs-shutter-speeds) is a holdover term from the days of mechanical movie cameras, where a rotating 360-degree shutter was open for a percentage of each rotation (right). It is now also applied to other camera shutters, including “electronic shutters” (many larger mirrorless cameras have both mechanical and electronic shutter options with different effects). A 180-degree shutter angle means the shutter (or the camera’s scanning) is open for one-half of the frame time, for example, 1/48th of a second for a 1/24th of a second frame time, or 1/180th of a second for a 1/90th of a second frame time. People typically talk about how different shutter angles affect the choppiness of motion and motion blur rather than brightness or ISO, even though the shutter angle does affect ISO/brightness through the change in exposure time.
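The shutter-angle arithmetic can be written down directly: exposure time is the open fraction of the rotation, angle/360, times the frame time, 1/fps. The sketch below reproduces the 180-degree examples above with exact fractions and adds the standard exposure trade-off that halving the exposure time requires doubling the ISO (gain) to keep the same image brightness, which is the link between shutter angle and noise. It assumes a fixed aperture and ignores any sensor-specific behavior.

```python
from fractions import Fraction


def exposure_time(shutter_angle_deg: int, fps: int) -> Fraction:
    """Exposure time in seconds: (angle / 360) of each 1/fps frame."""
    return Fraction(shutter_angle_deg, 360) / fps


def iso_for_same_brightness(base_iso, base_time: Fraction, new_time: Fraction):
    """ISO needed to keep brightness when exposure time changes (aperture fixed)."""
    return base_iso * base_time / new_time


print(exposure_time(180, 24))  # 1/48
print(exposure_time(180, 90))  # 1/180
# Going from 180 to 90 degrees at 90 fps halves the exposure, so ISO must double.
print(iso_for_same_brightness(100, exposure_time(180, 90), exposure_time(90, 90)))  # 200
```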

I’m not sure why Linus is saying that certain lights are reducing the shutter angle and thus increasing the ISO, unless he means that the shutter time is being reduced with certain types of light (or simply bright lights), or that, with certain types of flickering lights, the cameras are missing much of the light. If so, it is a roundabout way of describing the camera issue; as discussed above, the term shutter angle is typically used in the context of motion effects, with brightness/ISO being more of a side issue.
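To illustrate how a flickering light can make a camera “miss” light, the sketch below models the light as an idealized square wave (on for a fixed fraction of each period) and measures how much on-time a single exposure window captures at every possible phase. The 120 Hz flicker and 50% duty cycle are assumptions for illustration; real LED drivers and fluorescent lights have other waveforms, and this is not a model of the AVP’s actual cameras. When the exposure is an integer multiple of the flicker period, every phase captures the same light; when it is shorter, the captured light swings with phase, which a rolling shutter (whose rows start at different times) turns into banding.

```python
import math


def light_captured(t0: float, exposure: float, period: float, duty: float) -> float:
    """Seconds of 'light on' seen by the exposure window [t0, t0 + exposure] for a
    square-wave light that is on for duty * period at the start of every period."""
    on_len = duty * period
    end = t0 + exposure
    total = 0.0
    k = math.floor(t0 / period)  # on-intervals of earlier periods end by k * period <= t0
    while k * period < end:
        on_start = k * period
        on_end = on_start + on_len
        total += max(0.0, min(end, on_end) - max(t0, on_start))
        k += 1
    return total


def capture_range(exposure: float, period: float, duty: float, phases: int = 1000):
    """(min, max) light captured as the exposure start sweeps one flicker period."""
    samples = [light_captured(i * period / phases, exposure, period, duty)
               for i in range(phases)]
    return min(samples), max(samples)


PERIOD = 1 / 120  # hypothetical flicker: 60 Hz mains rectified to 120 Hz
DUTY = 0.5        # hypothetical square-wave duty cycle

for label, exposure in [("2 periods", 2 * PERIOD), ("1/4 period", PERIOD / 4)]:
    lo, hi = capture_range(exposure, PERIOD, DUTY)
    print(f"{label}: captured {lo * 1e3:.3f} to {hi * 1e3:.3f} ms of light")
```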

A related temporal issue is the duty cycle of the displays (as opposed to the passthrough cameras), which has a similar “shutter angle” issue. VR users have found that displays with long on-time duty cycles cause perceived blurriness with rapid head movement, so they tend to prefer display technologies with low duty cycles. However, low display duty cycles typically result in lower display brightness. LED-backlit LCDs can drive the LEDs harder for shorter periods to help make up for the brightness loss, but OLED microdisplays commonly have relatively long (sometimes 100%) on-time duty cycles. I have not yet had a chance to check the duty cycle of the AVP’s displays, but it is on my to-do list. In light of Linus’s comments, I will also want to set up some experiments to check the temporal behavior of the AVP’s passthrough cameras.
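A common first-order way to reason about this trade-off: while the eye tracks a fixed point in the world during a head turn, a frame held on the display for its whole on-time smears across the retina by roughly the head’s angular speed times the on-time, and average brightness is roughly peak brightness times duty cycle. The sketch below encodes those two relationships; the 100 degrees/second head turn, 90 Hz refresh, duty cycles, and nit values are hypothetical inputs, not AVP measurements.

```python
def persistence_blur_deg(head_speed_deg_s: float, refresh_hz: float, duty: float) -> float:
    """Approximate smear (degrees) of a sample-and-hold frame during a head turn."""
    on_time_s = duty / refresh_hz
    return head_speed_deg_s * on_time_s


def peak_needed(target_avg_nits: float, duty: float) -> float:
    """Peak luminance needed to reach a target average luminance at a given duty cycle."""
    return target_avg_nits / duty


# Hypothetical inputs: 100 deg/s head turn, 90 Hz refresh, 100 nits average target.
for duty in (1.0, 0.25):
    blur = persistence_blur_deg(100, 90, duty)
    print(f"duty {duty:.0%}: ~{blur:.2f} deg smear, "
          f"{peak_needed(100, duty):.0f} nits peak for 100 nits average")
```

Cutting the duty cycle to a quarter cuts the smear to a quarter but requires four times the peak brightness, which is why low-persistence displays need to be driven much harder.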

I think he’s referring to the variable refresh rates of 90/96/100, but that wouldn’t really be shutter angle. I do find AVP works tremendously better in indirect indoor sunlight.

Thanks, I somewhat suspect Linus has a “different” definition of shutter angle. I can’t see how changing the refresh rate by about 10% would affect the brightness that much. I’m guessing that what Linus might be seeing is that he can’t get the cameras to work better by cranking up the studio lighting, but I would hope that good studio lighting does not use pulse-width modulation, as it would mess up all kinds of cameras with rolling shutters, not just the AVP’s.
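As a rough check on the refresh-rate idea: at a fixed shutter angle, exposure time scales with 1/fps, so the brightness change in photographic stops is log2(old fps / new fps). The sketch assumes the cameras keep a fixed shutter angle as the refresh rate changes, which is itself an assumption; the 90 and 100 Hz values come from the comment above.

```python
import math


def exposure_change_stops(old_fps: float, new_fps: float) -> float:
    """Change in exposure, in stops, at a fixed shutter angle (negative = darker)."""
    return math.log2(old_fps / new_fps)


print(f"90 -> 100 Hz: {exposure_change_stops(90, 100):+.2f} stops")
print(f"48 -> 24 fps: {exposure_change_stops(48, 24):+.2f} stops")
```

Under that assumption, going from 90 to 100 Hz costs only about 0.15 stop (roughly a 10% exposure change), small next to the full stop (2x) lost by halving the exposure time.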

Any information on the infrared sensors used for eye and hand tracking plus Optic ID?

I haven’t done anything with them; I’m primarily looking at images and optics. I asked iFixit about them, and they said the cameras didn’t have easily identifiable markings.

Based on how the eye tracking behaves with my eye (which has a slightly distorted cornea), being erratic while the camera image seems pretty steady, it looks like the eye tracking is looking for the “glint” on the cornea. Since they are using it for ID, they may be doing a bit better by looking at the iris. Either way, the eye tracking, while good, is not great in my experience.

Perhaps STMicroelectronics tech is in play? They have a long history with Apple and were working with them on enhanced sensors using quantum dots some years back (e.g., 2018/19). Does Optic ID use a longer, eye-safe infrared wavelength above 1000 nm?

I have not heard anything about STMicroelectronics, but I can check around. I also don’t know what IR wavelength Optic ID is using.

Yes, you likely have the wrong interface (and so does Linus). The solo band wheel should not need to be clicked more than a couple of clicks beyond where it just starts to grip. It took me 7 tries at a light seal to find this to be true. I had Zeiss lenses, and I went from 25W to 13W. I suspect you need a 3 as your middle number (closer) or a wider size (first number), because if the light seal is too narrow, it may sit too far forward on your head. It should rest equally on your cheeks and forehead, it should not slip down with the solo band gently tight, and it absolutely should not rest on the bridge of your nose.

Thanks. Apple appears to be generous in letting me swap for a 23W even though I am outside their normal exchange period. If you think about it, the short return period punishes people who don’t return things at the drop of a hat.

While I appreciate that Apple is being flexible with its exchange policy, having so many different interfaces is a very poor design concept for something going to many thousands of people. Only Apple could get away with it. They might as well go the route of Bigscreen Beyond and scan each face to generate a custom insert per person (which is impractical for a large market). Better yet, they could develop a more adaptable design; a better design, even if somewhat more expensive, would save a lot of support and returns.

“It makes a mess of your face” –

I think this is product-recall territory. Almost every person I’ve seen wear this gets these red, rashy rings.

It’s not just pressure; it’s actively irritated skin. I have 4 of the Apple facial rings that I swap between and clean, FWIW.

There is something wrong with the foam in the mask I believe perhaps similar to –

https://www.meta.com/quest/quest-2-facial-interface-recall/

I added a $10 Quest 2 silicone mask skin to my Apple Vision Pro, and it makes all the difference.

The pressure is still there, but I don’t get the weird itchy irritation or the red ring. It’s quite suggestive to me how much more comfortable it is.

Thanks, I tried an improvised interface with a microfiber cloth covering the face seal and didn’t see much difference. I have ordered one of the “silicone skins” to see if it helps when it arrives (expected tomorrow).

I also got my 23W today, which is about 5 mm shorter than the 25W I had. It seems to let me apply less pressure to get the eye tracking to work, and the eye tracking seems to work better (subjectively, at least). But after about 30 minutes with the microfiber cloth interface, my face is still getting red. I don’t feel any itching.

Thanks,

While there might be some irritation for some people, in my case, I think it is more of a pressure issue.

I got a silicone face pad cover (for a Quest 3, about $12 on Amazon) today and a 23W light shield (a free exchange from Apple). I find the biggest improvement is that the combination greatly reduces the amount of pressure required to hold the mask on with the solo band. The 23W (about 5 millimeters shorter) keeps me from having to tighten the band for eye tracking, and the silicone mask (which is about 1 mm thick) adds a lot of grip by being a little spongy and adding surface area for gripping. I barely have to turn the knob on the solo band to keep the headset in place.

It still leaves a small mark on my forehead after about a half hour, but it is less, and overall the headset is more comfortable to wear. It is letting me separate the pain from eye strain (which I still have) from the pain of pressure on my head (which seems less, at least in one session of use).

Yes, the facial interface makes a lot of difference. I went from 25W to 33W and then to 23W, and it’s been more comfortable to wear. Also, when using a solo band, you need to move it up and down your head to equally spread the pressure between your forehead and cheeks. And it doesn’t have to be super tight, just tight enough to stay on your face.

I can wear mine for 2-3 hours. I do get the marks, but they disappear within a minute or so.

Despite all the gen 1 product issues and a relatively high friction to start using it (external battery, hold it a certain way to put it on, etc), it’s still the first XR device that I can actually use for productivity.

While it’s not as sharp as my 4K 32″ screen, it’s good enough. But the biggest benefit for me is reduced eyestrain. For the first time in my life, I do not get double vision after working at my computer for a few hours. This alone is worth the price for me. YMMV, of course, as all people are different. I also like the better sitting posture when I’m not at my desk, and I don’t mind turning my head a bit more than when working with my normal display; it exercises the neck and makes it less stiff. Plus, as a glasses wearer with a significant difference between my eyes and astigmatism, I have to turn my head slightly even when working with my single 32″ monitor anyway.

Thanks, I got a silicone face pad cover (for a Quest 3, about $12 on Amazon) today and a 23W light shield (a free exchange from Apple). I find the biggest improvement is that the combination greatly reduces the amount of pressure required to hold the mask on with the solo band. The 23W (about 5 millimeters shorter) keeps me from having to tighten the band for eye tracking, and the silicone mask (which is about 1 mm thick) adds a lot of grip by being a bit spongy and adding surface area.

As you stated, it still leaves a mark after wearing it for a half hour or more, but not as severe. The overall comfort is significantly better.

I don’t agree on the eyestrain. My eyes start hurting after about 30 minutes of use, and by 60 minutes, I am relieved to take it off. In terms of functionality, I very much agree with the Linus video, and with what I stated in the article, that much less eye and head movement is required with a physical monitor. There is no way I would prefer the AVP over my two-monitor setup (a 34″ 22:9 3840×1440 and a 28″ 4K, both the same height) in terms of interacting with the monitor. People will naturally start turning their heads for any content outside of about 30 degrees (this varies from person to person).

typo? “LED backlit LEDs” -> LCDs?

Thanks, I just fixed it.

“While this combination supports up to 4K at 120p”

I would assume it is supposed to say at 120 Hz.

I got here from TechAltar’s recent video about VR, even though I have watched the LTT video referenced here, lol.

Anyway, interesting details you’ve got on the AVP/VR on this site.